Context Compression in AI Agents Is Not a Hack - It's a Cognitive Reset Mechanism

Why long-running coding agents degrade over time - and how controlled forgetting makes them smarter

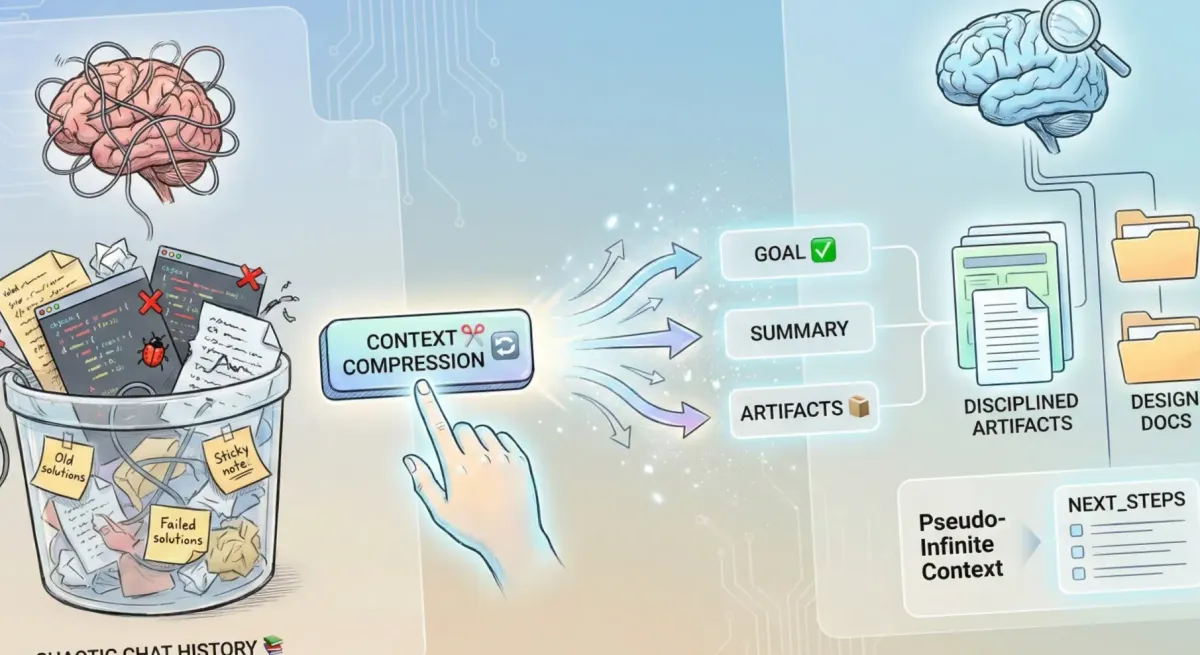

Introduction: the hidden failure mode of long-running agents

If you run a coding agent in one continuous chat on the same project for weeks, something subtle but destructive happens.

The chat history slowly turns into a massive few-shot database:

- successful solutions

- failed experiments

- half-working patches

- abandoned ideas that almost worked

From the model's perspective, all of this sits nearby in the context window - equally "relevant", equally "valid".

What looks like experience is actually cognitive noise.

At some point, the agent stops improving.

Not because it's weak - but because it's remembering too much.

This is where context compression enters the picture.

Not as a workaround.

Not as a hack.

But as a deliberate reset of the agent's working memory.

Why long context turns into junk

The common intuition is:

"More context = more intelligence".

In practice, the opposite often happens.

Long-running chats accumulate:

- outdated assumptions

- failed approaches that still look plausible

- local optima that were correct at the time but are now wrong

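The model has no built-in way to tell which parts of the history are obsolete.

It only sees proximity and token probability, so a superseded approach that is still in the window looks just as plausible as the current one.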

As a result:

- old patterns get reproduced

- mistakes become reinforced

- the agent starts "thinking in circles"

This isn't a model limitation.

It's a memory management problem.

Context compression is not forgetting - it's distillation

When people hear "context compression", they imagine loss.

That's the wrong mental model.

Good compression does three things at once:

1/ Removes noise

Failed paths, abandoned ideas, redundant back-and-forth.

2/ Preserves intent

The current goal, constraints, and direction of the project.

3/ Encodes conclusions, not history

What was learned - not how painfully it was learned.

In other words:

compression replaces raw memory with structured understanding.
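The three properties above can be illustrated with a toy distillation pass. This is a minimal sketch under stated assumptions, not the author's method: it assumes a hypothetical transcript format in which every turn is tagged as a goal, an attempt, or chatter, and in which a one-line lesson has already been attached to any turn that taught something. How those lessons get extracted is out of scope here. `Turn` and `distill` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    # Hypothetical record of one chat turn; the fields are illustrative assumptions.
    kind: str         # "goal", "attempt", or "chatter"
    text: str         # raw content of the turn
    lesson: str = ""  # one-line takeaway, if the turn taught something


def distill(history: list[Turn]) -> dict:
    """Collapse a raw transcript into intent plus conclusions."""
    # Preserve intent: only the most recent goal survives; earlier framings are dropped.
    goals = [t.text for t in history if t.kind == "goal"]
    goal = goals[-1] if goals else ""

    # Encode conclusions, not history: keep each lesson once, in first-seen order,
    # and discard the raw text of the turn that produced it.
    conclusions: list[str] = []
    for t in history:
        if t.lesson and t.lesson not in conclusions:
            conclusions.append(t.lesson)

    # Remove noise: chatter and attempts without a lesson contribute nothing.
    return {"goal": goal, "conclusions": conclusions}
```

A failed attempt is not simply deleted: if it produced a lesson, that lesson survives as a conclusion, while the patch text and the back-and-forth around it are dropped.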

A practical compression schema: GOAL, SUMMARY, ARTIFACTS

A reliable compression pass usually produces three components:

1. GOAL

What the agent is currently trying to achieve.

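Not vague motivation - but an operational objective: "make the failing auth tests pass without changing the public API" rather than "improve the auth module".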

2. SUMMARY

A distilled description of:

- what has been tried

- what worked

- what failed

- what constraints matter

This is not a log.

It's an executive brief.

3. ARTIFACTS

Everything that must persist outside the chat:

- source code

- configs

- specs

- decisions frozen in files

Artifacts replace memory.

They don't compete with it.
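One way to make the schema concrete is a small typed container that renders itself as the brief a fresh session starts from. This is a sketch, assuming a plain-text brief is what gets fed to the new session; `CompressedContext`, its field names, and the output layout are hypothetical, not a format the article prescribes.

```python
from dataclasses import dataclass, field


@dataclass
class CompressedContext:
    """Hypothetical container for the GOAL / SUMMARY / ARTIFACTS schema."""
    goal: str                                             # operational objective
    tried: list[str] = field(default_factory=list)        # approaches attempted
    worked: list[str] = field(default_factory=list)       # what succeeded
    failed: list[str] = field(default_factory=list)       # what failed, as lessons
    constraints: list[str] = field(default_factory=list)  # constraints that matter
    artifacts: list[str] = field(default_factory=list)    # file paths, not file contents

    def render(self) -> str:
        """Render as a short plain-text brief to seed a fresh session."""
        lines = ["GOAL:", self.goal, "", "SUMMARY:"]
        for label, items in (
            ("Tried", self.tried),
            ("Worked", self.worked),
            ("Failed", self.failed),
            ("Constraints", self.constraints),
        ):
            if items:  # skip empty categories to keep the brief short
                lines.append(f"{label}: " + "; ".join(items))
        lines += ["", "ARTIFACTS:"]
        lines += [f"- {path}" for path in self.artifacts]
        return "\n".join(lines)
```

The design choice worth noting is that `artifacts` holds paths rather than contents: the brief stays small, and the agent reads the files themselves when it needs them.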

Why artifacts beat infinite context

An agent with:

- a short, clean context

- and strong external artifacts

is more effective than one drowning in chat history.

Artifacts are:

- explicit

- stable

- non-probabilistic

They act as externalized cognition.

Instead of forcing the model to remember, you let it read.

This is how we scale agent work from hours to weeks without degradation.
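"Let it read" can be as simple as rebuilding the prompt from files on disk at the start of each session. The sketch below assumes artifacts are plain UTF-8 text files and uses a character budget as a crude stand-in for a token budget; `build_context` and its parameters are hypothetical.

```python
from pathlib import Path


def build_context(goal: str, artifact_paths: list[str], budget_chars: int = 20_000) -> str:
    """Assemble a fresh prompt from the current goal plus artifact files on disk.

    Hypothetical helper: the budget counts characters, not tokens, and only the
    goal line and included file blocks count toward it.
    """
    parts = [f"GOAL: {goal}"]
    used = len(parts[0])
    for raw in artifact_paths:
        path = Path(raw)
        if not path.is_file():
            # Surface missing artifacts explicitly instead of silently dropping them.
            parts.append(f"[missing artifact: {raw}]")
            continue
        block = f"--- {raw} ---\n{path.read_text(encoding='utf-8')}"
        if used + len(block) > budget_chars:
            # Keep the prompt bounded; the agent can still open the file on request.
            parts.append(f"[skipped {raw}: over budget]")
            continue
        parts.append(block)
        used += len(block)
    return "\n\n".join(parts)
```

Because the files are the source of truth, a new session built this way sees the current state of the code and decisions rather than a transcript of how they evolved.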

The paradox: agents often get smarter after compression

This surprises many people the first time they see it.

After a compression reset:

- hallucinations drop

- reasoning becomes sharper

- solutions converge faster

Why?

Because the agent is no longer anchored to:

- early wrong guesses

- outdated framing

- accidental local patterns

You didn't erase intelligence.

You removed interference.

Practical guidelines for long-running agent work

If you work with coding agents seriously, a few rules emerge quickly:

- Treat chat history as working memory, not long-term storage.

- Compress aggressively when progress slows or loops appear.

- Store knowledge in artifacts, not conversations.

- Reset context without guilt - it's a feature, not a failure.

- Design your workflow around intent preservation, not transcript preservation.
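The rule "compress when progress slows or loops appear" can be turned into an explicit trigger. The sketch below is one possible heuristic, not a standard: `should_compress`, its thresholds, and the "turns since an artifact changed" counter are all hypothetical. It uses Python's `difflib.SequenceMatcher` to flag near-duplicate recent messages as a cheap proxy for looping.

```python
from difflib import SequenceMatcher


def should_compress(
    recent_messages: list[str],
    turns_since_artifact_change: int,
    similarity_threshold: float = 0.9,
    stall_turns: int = 8,
) -> bool:
    """Hypothetical trigger: compress when the agent stalls or repeats itself."""
    # Stall: many turns without any edit to code, config, or spec files.
    if turns_since_artifact_change >= stall_turns:
        return True
    # Loop: some pair of recent messages is nearly identical.
    for i in range(len(recent_messages)):
        for j in range(i + 1, len(recent_messages)):
            ratio = SequenceMatcher(None, recent_messages[i], recent_messages[j]).ratio()
            if ratio >= similarity_threshold:
                return True
    return False
```

The pairwise comparison is quadratic, which is fine for the handful of recent messages it is meant to scan; both thresholds are placeholders that would need tuning for a real workflow.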

Conclusion: stop hoarding tokens, start managing cognition

Context compression isn't about saving tokens.

It's about respecting a fundamental constraint:

attention is finite - even for models.

Once you treat agents as cognitive systems rather than chatbots, compression stops feeling destructive and starts feeling like good engineering.

Try it in your next long-running project.

Clear the junk.

Keep the intent.

Let the agent think again.

Originally explored as a thread on X. Expanded here for depth and clarity.